The Open Transprecision Computing project OPRECOMP, supported by the European Union’s H2020-EU.1.2.2. – FET Proactive research and innovation programme under grant agreement #732631, funded 10 open-source projects on transprecision computing. The projects were selected by 10 reviewers after a Summer of Code call.

Our goal was to raise awareness of transprecision principles in a wider community and to provide examples that show improved efficiency without significant degradation in quality. We wanted projects including:

- Software, kernels, and algorithm implementations using existing hardware systems (CPUs, GPGPUs, microcontrollers)

- Applications ported to platforms developed by OPRECOMP members. In OPRECOMP we are working on transprecision systems covering a wide range of application domains from IoT to HPC. Proposals that plan to develop applications on these platforms were also welcome.

- Novel HW architectures implemented on FPGAs or ASICs to better support transprecision computing

Seven of them were presented at the Summer School in Perugia in September 2019:

- Time series analysis using transprecision computing, Ivan Fernandez Vega

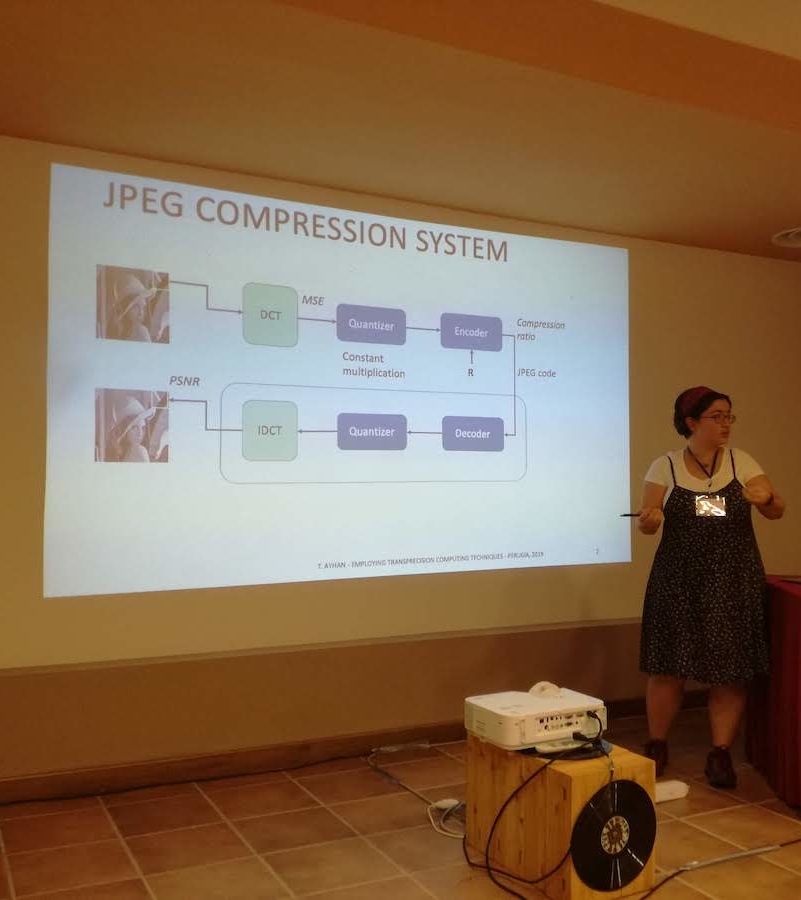

- Employing Transprecision Computing Techniques on JPEG Compression System, Tuba Ayhan

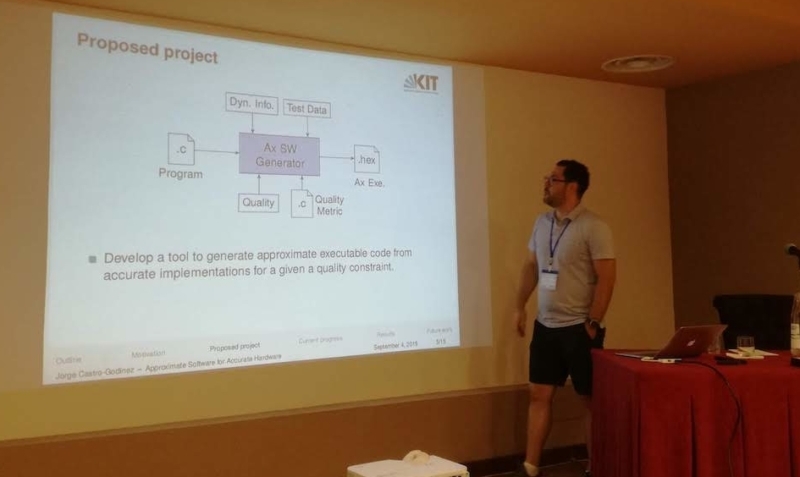

- Approximate Software for Accurate Hardware, Jorge Castro-Godínez

- Design and implementation of an efficient Floating Point Unit (FPU) in the IoT era, Davide Zoni

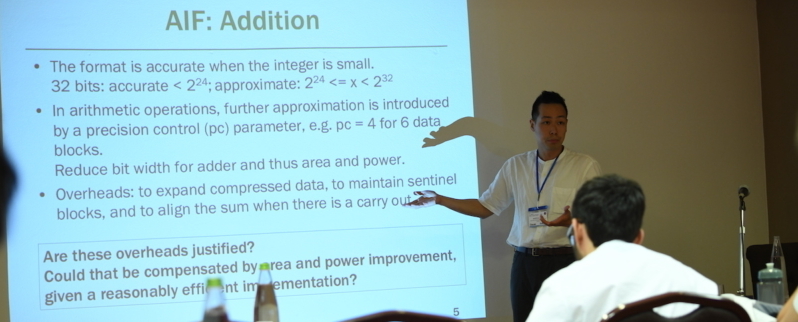

- Circuit Design and Analysis for Approximate Integer Format, Matthew Tang

- Value Range Analysis and Feedback-Driven Optimization for a Mixed Precision Compiler, Daniele Cattaneo

- Approximate Computing with Unreliable Memory: FPGA-Based Emulation for PULP, Marco Widmer

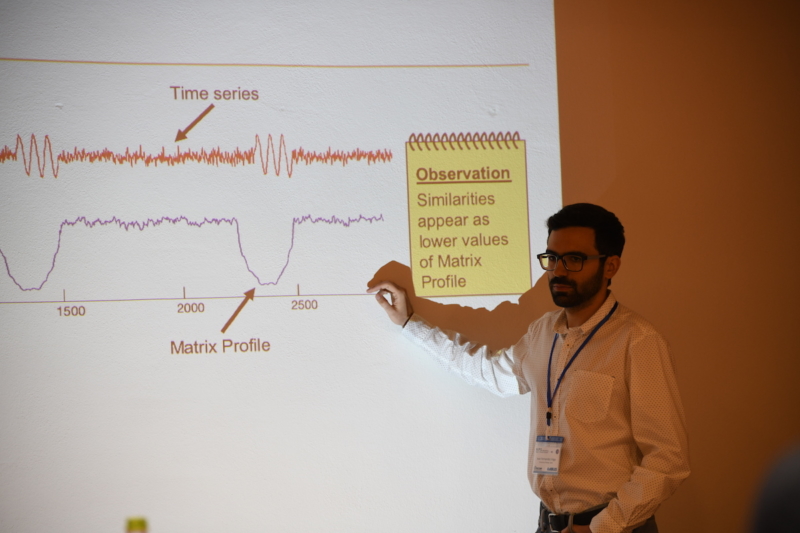

Time series analysis using transprecision computing – Ivan Fernandez Vega

Time series analysis is a data-intensive application and an important research topic in many fields. In particular, motif discovery in time series is of great interest in fields such as biology, seismology, traffic prediction, and energy conservation. A recently proposed technique, known as the Matrix Profile, has become the state of the art for this task. It makes it possible to obtain exact motifs from a time series, which can be used to infer events, predict outcomes, detect anomalies, and more.

However, current implementations of Matrix Profile use the same precision for all calculations, without taking into account the nature of the data or the user's accuracy requirements.

This results in over-precise computation in a wide range of scenarios. Transprecision computing could therefore be a successful approach for obtaining sufficiently accurate results while reducing energy consumption and resource usage.

This project aims to develop a transprecision version of the latest Matrix Profile implementation and test it using a RISC-V based architecture. Concretely, the project will focus on the SCRIMP implementation of Matrix Profile.
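
To make the precision question concrete, the following minimal sketch computes a brute-force matrix profile at several floating-point precisions and reports how far each deviates from a float64 reference. It is not the SCRIMP algorithm and not the project's code: the function `matrix_profile`, the synthetic series, and the window length `m=32` are assumptions chosen only for illustration.

```python
import numpy as np

def matrix_profile(ts, m, dtype=np.float64):
    """Brute-force matrix profile computed entirely in `dtype`.

    For every length-m window, returns the z-normalized Euclidean distance
    to its nearest neighbour outside a small exclusion zone.
    `dtype` must be a NumPy scalar type such as np.float32.
    """
    ts = np.asarray(ts, dtype=dtype)
    n = len(ts) - m + 1
    # Gather all windows and z-normalize each one.
    windows = ts[np.arange(n)[:, None] + np.arange(m)[None, :]]
    mean = windows.mean(axis=1, keepdims=True)
    std = windows.std(axis=1, keepdims=True)
    z = (windows - mean) / std
    # For z-normalized windows, d^2 = 2m(1 - correlation).
    corr = (z @ z.T) / dtype(m)
    d2 = np.maximum(dtype(2 * m) * (dtype(1) - corr), dtype(0))
    dist = np.sqrt(d2)
    # Exclusion zone: ignore trivial matches of a window with its neighbours.
    idx = np.arange(n)
    dist[np.abs(idx[:, None] - idx[None, :]) <= m // 2] = np.inf
    return dist.min(axis=1)

# Hypothetical test signal: a noisy sine wave.
rng = np.random.default_rng(0)
t = np.arange(300)
series = np.sin(0.2 * t) + 0.1 * rng.standard_normal(t.size)

reference = matrix_profile(series, m=32, dtype=np.float64)
for dtype in (np.float32, np.float16):
    approx = matrix_profile(series, m=32, dtype=dtype)
    err = np.max(np.abs(approx.astype(np.float64) - reference))
    print(f"{dtype.__name__}: max abs deviation from float64 = {err:.2e}")
```

Whether a given deviation is acceptable depends on the data and on what the motifs are used for, which is exactly the accuracy requirement that a single fixed precision ignores.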

Employing Transprecision Computing Techniques on JPEG Compression System – Tuba Ayhan

The energy efficiency of a signal processing system should be evaluated by taking into account all components of the system, from data acquisition to data storage. Moreover, the quality of the processing result depends on several parameters, from the quality of the sensors to the precision of the digital processing units.

This project aims to demonstrate this holistic approach on a simple image processing application using the transprecision computing paradigm.

A JPEG compression system is chosen as the application for development and demonstration, because its quality-of-result metric (compressed image PSNR) can be traded off for energy savings. This trade-off can be made at either level of the system:

- at the algorithm level, by setting the compression ratio

- at the digital processing level, by choosing the precision of the arithmetic computation units. The digital processing precision can in turn be chosen depending on the compression ratio.
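
The two knobs listed above can be sketched on a single 8x8 block. The example below is a simplified stand-in for JPEG, not the project's implementation: it uses one uniform quantization step `q_step` instead of a JPEG quantization table, omits entropy coding, and emulates arithmetic precision with NumPy dtypes. The helper names and the synthetic block are assumptions.

```python
import numpy as np

def dct_matrix(n=8):
    """Orthonormal DCT-II basis, the transform applied to 8x8 JPEG blocks."""
    k = np.arange(n)[:, None]
    x = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

def compress_block(block, q_step, dtype):
    """Forward DCT, uniform quantization and inverse DCT, all in `dtype`."""
    c = dct_matrix().astype(dtype)
    shifted = block.astype(dtype) - dtype(128)      # JPEG level shift
    coeffs = c @ shifted @ c.T
    quantized = np.round(coeffs / dtype(q_step)) * dtype(q_step)
    return (c.T @ quantized @ c).astype(np.float64) + 128.0

def psnr(ref, test):
    """Peak signal-to-noise ratio in dB for 8-bit data."""
    mse = np.mean((ref - test) ** 2)
    return 10.0 * np.log10(255.0 ** 2 / mse)

# Hypothetical block: a smooth ramp plus sensor-like noise.
rng = np.random.default_rng(0)
ramp = np.add.outer(np.arange(8) * 12.0, np.arange(8) * 6.0) + 60.0
block = np.clip(ramp + rng.normal(0.0, 10.0, (8, 8)), 0, 255).round()

for q_step in (4, 32):                  # algorithm-level knob
    for dtype in (np.float64, np.float16):  # arithmetic-level knob
        out = compress_block(block, q_step, dtype)
        print(f"q_step={q_step:2d} {dtype.__name__}: PSNR = {psnr(block, out):.1f} dB")
```

When the quantization step is coarse, the quantization error is likely to dominate the arithmetic error, which is the intuition behind choosing the processing precision according to the compression ratio.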

This application enables us to evaluate two key components of the transprecision paradigm: energy-efficient hardware design and application-oriented system analysis. The proposed project is therefore twofold.

Firstly, approximate arithmetic circuit design opportunities for the posit number format will be explored. Posit is a recently proposed alternative arithmetic derived from the unum number format. Adders and multipliers using posit will be implemented on FPGA. Their power consumption will be estimated and compared with that of conventional and approximate arithmetic units. The effect of reducing precision on the power consumption of these arithmetic circuits will be characterized.

Secondly, the system architecture and the precision of the arithmetic units in the chosen application will be determined using transprecision computing ideas. For the chosen compression ratio and precision, the digital JPEG compression block will be implemented on two platforms: FPGA and GAP8. For the FPGA implementation, unum-formatted arithmetic blocks will be used, so that the first part of the project is demonstrated in an application.

Approximate Software for Accurate Hardware – Jorge Castro-Godínez

The need to keep increasing the performance of computing systems, and the rise of physical challenges to achieving it, has motivated the emergence of new design paradigms and computer architectures. Approximate and Transprecision Computing have emerged as novel design paradigms suitable for applications with inherent error resilience.

By accepting good-enough results caused by imprecise calculations, e.g., in image and video processing where human perception plays a major role, the techniques developed under these paradigms trade off computational quality (accuracy of results) to reduce the required computational effort (execution time, area, power, or energy).

Although many approaches propose adopting inexact or precision-reduced hardware to exploit this error tolerance, exact processors can also gain performance on these types of applications by executing approximate versions of them.

This project proposes a compiler-supported methodology to generate approximate software for exact hardware.
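
As a hedged illustration of what approximate software on exact hardware can look like, the sketch below applies loop perforation, a well-known software approximation technique in which a loop skips some of its iterations. It is shown only as an example of the idea and is not necessarily one of the transformations used in this project. The function `mean_brightness` and the synthetic pixel data are assumptions.

```python
def mean_brightness(pixels, stride=1):
    """Average pixel value; stride > 1 skips samples (loop perforation)."""
    total = 0.0
    count = 0
    for i in range(0, len(pixels), stride):
        total += pixels[i]
        count += 1
    return total / count

# Hypothetical 8-bit pixel data.
pixels = [(37 * i) % 256 for i in range(10000)]

exact = mean_brightness(pixels)             # visits every pixel
approx = mean_brightness(pixels, stride=4)  # visits one pixel in four
rel_err = abs(approx - exact) / exact
print(f"exact={exact:.2f} approx={approx:.2f} relative error={rel_err:.2%}")
```

The perforated version runs about a quarter of the loop iterations on unmodified hardware, at the price of a small error in the result. A compiler-supported flow would apply such transformations automatically instead of by hand.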

Design and implementation of an efficient Floating Point Unit (FPU) in the IoT era – Davide Zoni

As capabilities of IoT devices soar and prices plummet, sensors and gadgets are digitizing lots of information that was previously unavailable.

However, the quantity of such digitized data grows faster than the computational capacity to process them, thus highlighting the so-called data deluge problem. In this scenario, the adoption of novel processing techniques, i.e., machine learning algorithms, and novel approaches to shape computing infrastructures, i.e., edge-computing paradigm, is imperative.

The edge computing paradigm emerged to face the data deluge problem by enforcing a first processing pass on the digitized data as close as possible to the acquiring IoT node. This processing pass extracts critical features from the raw data, with a net saving of storage capacity and computational power in the subsequent computational stages.

In addition, the IoT and Cyber Physical Systems (CPS) are expected to deliver enough computational power to sustain augmented reality, autonomous driving, and computer graphics algorithms, such as mesh processing, scene mapping, or stereo matching, to enhance the end-user experience at run-time. These computing requirements are still subject to strict energy efficiency constraints, because most of the smart objects employed in edge computing scenarios are battery powered.

To meet such contrasting requirements, we need to revise the architectural and microarchitectural design of IoT and CPS platforms. In particular, the floating point representation of the numbers within the feature extraction algorithms is a key design knob for trading off the quality of the estimates against computational efficiency, and it has been extensively investigated in the state of the art.

This research aims to design and validate the b16FPU, a 16-bit floating point unit (FPU) that remains compliant with the BFLOAT16 specification document. Such a component is a key alternative to the single-precision floating point units made available by well-known open-hardware projects.
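
For readers unfamiliar with the format, a bfloat16 value keeps the sign bit, the 8 exponent bits, and the top 7 fraction bits of an IEEE 754 single-precision number, so it can be obtained by rounding away the low 16 bits of a float32. The sketch below emulates this conversion in software with round-to-nearest-even. It illustrates the number format only and says nothing about the b16FPU design. The helper names are assumptions, and NaN inputs are not handled specially.

```python
import numpy as np

def to_bfloat16_bits(x):
    """Round float32 values to bfloat16; returns the uint16 bit patterns."""
    bits = np.atleast_1d(np.asarray(x, dtype=np.float32)).view(np.uint32)
    # Round to nearest even: add 0x7FFF plus the lowest bit that is kept,
    # then drop the 16 low-order bits.
    kept_lsb = (bits >> np.uint32(16)) & np.uint32(1)
    rounded = bits + np.uint32(0x7FFF) + kept_lsb
    return (rounded >> np.uint32(16)).astype(np.uint16)

def from_bfloat16_bits(b):
    """Expand bfloat16 bit patterns back to float32 (exact)."""
    wide = np.atleast_1d(np.asarray(b, dtype=np.uint16)).astype(np.uint32)
    return (wide << np.uint32(16)).view(np.float32)

values = np.array([1.0, 3.14159, 1000.5, -0.001], dtype=np.float32)
roundtrip = from_bfloat16_bits(to_bfloat16_bits(values))
for v, r in zip(values, roundtrip):
    print(f"{v!r} -> {r!r}")
```

Because the exponent field is unchanged, bfloat16 covers the same dynamic range as single precision while offering roughly two to three significant decimal digits.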

The design will be FPGA-optimized and the resulting b16FPU component will provide an interface to ease its integration within any open-hardware RISC-based solution. In particular, the entire design project focuses on three aspects:

- Flexibility and ease of use

- Assessment and measurable criteria

- Permissive open-hardware license

Circuit Design and Analysis for Approximate Integer Format – Matthew Tang

This project intends to design and implement an open source arithmetic unit based on Approximate Integer Formats.

The arithmetic unit, which at least supports basic arithmetic, will be embedded with other open hardware cores and then be evaluated in error resilient applications, to analyze the actual accuracy-power trade-off achievable on programmable SoC boards.

Its design and RTL models will be released under the CERN Open Hardware License in a public GitHub repository, so that they become a useful resource for other researchers to evaluate, compare, and further develop hardware for approximate computing.

Value Range Analysis and Feedback-Driven Optimization for a Mixed Precision Compiler – Daniele Cattaneo

This project proposes a compiler-level framework to convert floating point code to equivalent fixed point code. This approach has already proved beneficial in both the Embedded Systems and the High Performance Computing domains.

The plan is to extend our previous work on Tuning Assistant for Floating point to Fixed point Optimizations (TAFFO) to improve its effectiveness and automation.

The workplan involves the development from scratch of two compiler passes, the improvement of an existing compiler analysis, and their integration into our framework.

The goal is to assess the effectiveness of the work with an approximate computing benchmark suite, both on HPC-like and on embedded systems platforms.
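
The link between a value range and a fixed point format can be sketched by hand. Given the range a variable can take, one can derive how many integer bits are needed and spend the remaining bits of the word on the fraction. The example below is a manual illustration of that idea, not TAFFO's algorithm or API. All function names and the 16-bit signed word size are assumptions, and overflow of intermediate results is not checked.

```python
import math

def frac_bits_for_range(lo, hi, word_bits=16):
    """Fractional bits left in a signed word after covering [lo, hi]."""
    max_abs = max(abs(lo), abs(hi))
    int_bits = max(0, math.floor(math.log2(max_abs)) + 1) if max_abs > 0 else 0
    return word_bits - 1 - int_bits  # one bit is reserved for the sign

def to_fixed(x, frac_bits):
    """Quantize a float to an integer with `frac_bits` fractional bits."""
    return round(x * (1 << frac_bits))

def to_float(q, frac_bits):
    """Interpret a fixed point integer as a float."""
    return q / (1 << frac_bits)

def fixed_mul(a, b, frac_bits):
    """Fixed point multiply: the raw product has 2*frac_bits fractional
    bits, so shift right to return to the common format."""
    return (a * b) >> frac_bits

# Suppose a range analysis reports that both operands lie in [-3.5, 3.5].
fb = frac_bits_for_range(-3.5, 3.5)
a, b = to_fixed(1.5, fb), to_fixed(-2.25, fb)
print(fb, to_float(fixed_mul(a, b, fb), fb))
```

A tighter value range leaves more bits for the fraction and therefore gives a smaller quantization error, which is why the precision of the range analysis matters for the quality of the generated fixed point code.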

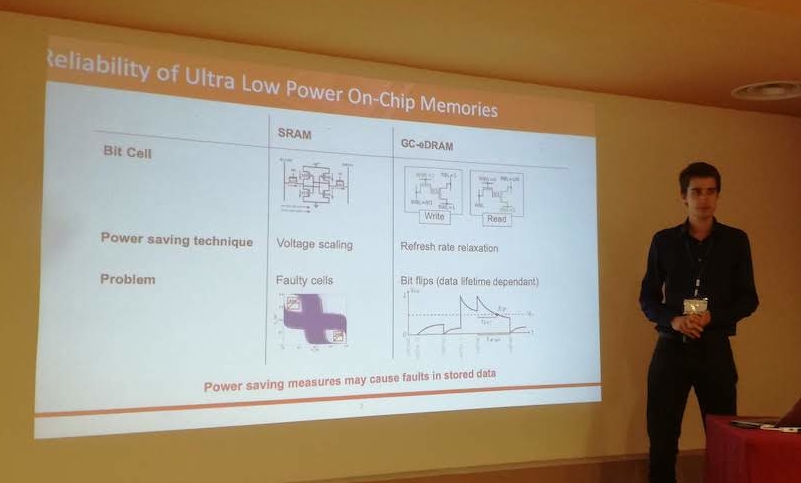

Approximate Computing with Unreliable Memory: FPGA-Based Emulation for PULP – Marco Widmer

Gain-Cell embedded DRAM (GC-eDRAM) is a cost- and energy-efficient, high-density alternative to embedded SRAM. Unfortunately, this type of memory requires a periodic refresh determined by the retention time of the worst-case bit-cell in the array, which is typically orders of magnitude shorter than that of the majority of the cells.

By relaxing the refresh rate below the critical limit, significant power savings and better memory availability can be obtained, but applications need to accept that data storage is no longer 100% reliable. Since the number of errors increases only slowly when relaxing the refresh rate, interesting tradeoffs between energy, memory availability, and precision (i.e., quality) can be obtained.

To evaluate these tradeoffs, extensive Monte Carlo simulations that include an accurate fault model of the memory are necessary. To enable this type of simulation, an FPGA emulator for GC-eDRAMs has been developed in our prior work. In this project, we propose to integrate and test this GC-eDRAM emulation in the PULP architecture.
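
A software-only flavour of such a Monte Carlo experiment is sketched below. It uses a deliberately simple fault model in which every stored bit flips independently with the same probability. This is an assumption made for illustration and is not the fault model of the FPGA emulator, which would reflect per-cell retention times. The function names and the test data are likewise hypothetical.

```python
import numpy as np

def inject_bit_flips(data, bit_error_rate, rng):
    """Return a copy of a uint8 array in which each bit is flipped
    independently with probability `bit_error_rate`."""
    bits = np.unpackbits(data)
    flips = (rng.random(bits.shape) < bit_error_rate).astype(np.uint8)
    return np.packbits(bits ^ flips).reshape(data.shape)

def mean_abs_error(data, bit_error_rate, trials, seed=0):
    """Monte Carlo estimate of the mean absolute error per stored byte."""
    rng = np.random.default_rng(seed)
    original = data.astype(np.int16)
    errors = []
    for _ in range(trials):
        corrupted = inject_bit_flips(data, bit_error_rate, rng)
        errors.append(np.mean(np.abs(corrupted.astype(np.int16) - original)))
    return float(np.mean(errors))

# Hypothetical memory contents: one byte of each possible value.
memory = np.arange(256, dtype=np.uint8)
for ber in (1e-4, 1e-3, 1e-2):
    print(f"bit error rate {ber:g}: mean abs error = "
          f"{mean_abs_error(memory, ber, trials=200):.3f}")
```

Sweeping the bit error rate in this way gives a quality curve that can be set against the power saved by a relaxed refresh rate.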

With this proposal, we demonstrate an interesting flavor of transprecision computing that is based on the idea of computing with unreliable, but more energy- and area-efficient memories.